Does the amount of L1, L2, and L3 cache on your CPU matter?

The main differences between L1, L2, and L3 cache memory are capacity and speed. L1 is very fast but small; L2 is larger but slower than L1; L3 is the slowest of the three but usually has the largest storage capacity.

All modern processors use a multi-level cache memory system, which lets data be stored temporarily on the chip for fast access. If you want to get the most out of the next CPU you buy, it helps to understand the differences between L1, L2, and L3 cache.

What is CPU cache memory?

CPU cache memory is short-term data storage located on the processor itself. It boosts the CPU’s performance by providing quick access to small, frequently requested pieces of data. Cache memory is organized into several tiers, or levels, that differ in location, speed, and size; they are generally called L1, L2, L3, and occasionally L4.

To understand why the CPU needs its own memory cache, consider how processor and RAM speeds have evolved. In early computers, there was not much of a speed gap between the processor and the RAM, so there was little concern that RAM would hold processing back. As processor speeds grew much faster than RAM speeds, a gap opened up that needed to be closed somehow. Cache memory was the solution.

Cache memory sits physically close to the CPU cores and operates at very high speed, typically between 10 and 100 times faster than DRAM. Because a fast modern CPU can get much of the data it needs from the cache, it spends far less time waiting on requests to relatively slow system memory.

Cache is built from a memory type called SRAM, and cost is the main reason SRAM isn’t simply used in place of DRAM for main memory. Compared with modern RAM modules, the cache on a CPU is very small, measured in kilobytes or megabytes rather than gigabytes, and producing SRAM in gigabyte capacities would be prohibitively expensive.

It’s important to distinguish cache memory from other forms of cache that are frequently present on your computer. Cache memory is specific to processor hardware, even though the term “cache” can refer to a variety of temporary memory storage types used to improve the efficiency of software or hardware.

What is L1 Cache?

The L1 cache, often known as the primary cache, is the smallest and fastest memory level. It is typically 64 KB per core, and because each core of the processor has its own built-in L1 cache, a quad-core CPU would have 256 KB in total.

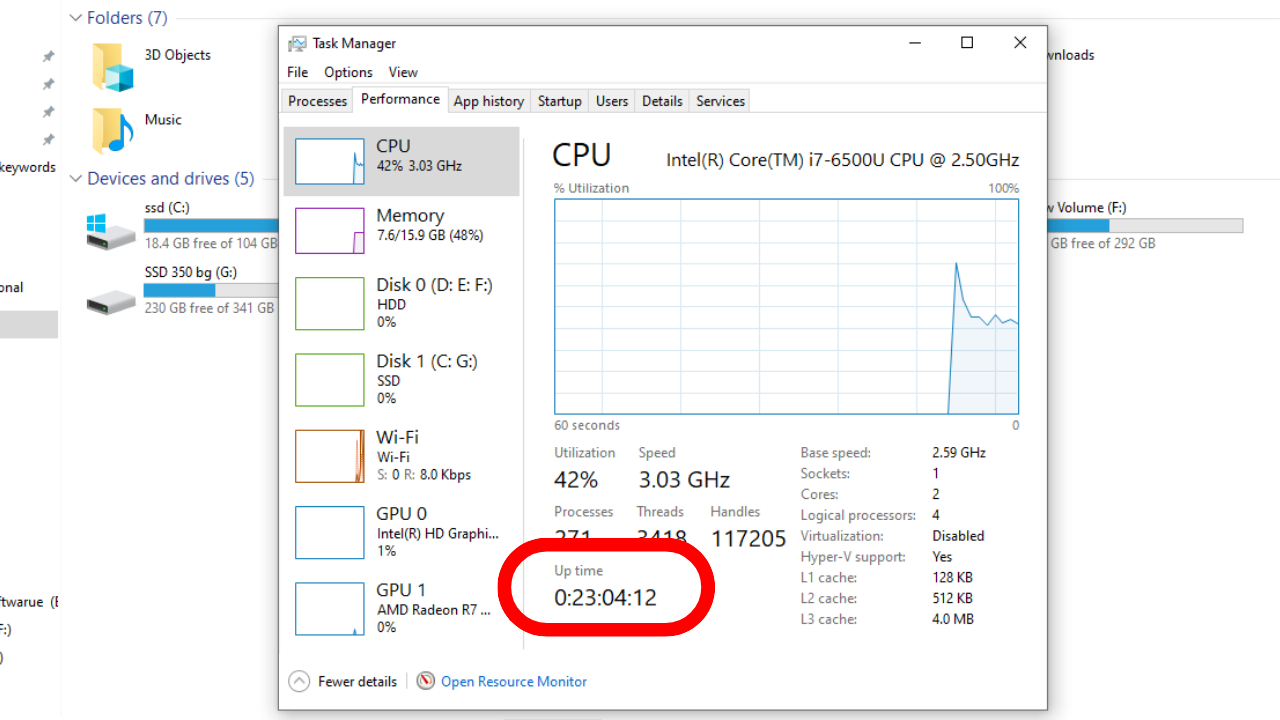

A tool such as CPU-Z can be used to view cache details. It will show that the L1 cache is split into two parts: L1-I (instruction) and L1-D (data). The L1 instruction cache holds the program instructions the core is about to execute, while the L1 data cache holds the data those instructions read and write.
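On Linux, the same breakdown is available without CPU-Z: the kernel describes each cache in sysfs, under /sys/devices/system/cpu/cpu0/cache/index*/, with files named level, type, and size. The minimal Python sketch below assumes that layout (it does not exist on Windows or macOS) and lists the caches seen by CPU 0; the function name read_cache_info is our own.

```python
from pathlib import Path

def read_cache_info(cache_dir="/sys/devices/system/cpu/cpu0/cache"):
    """Return sorted (level, type, size) tuples for one CPU's caches.

    Each index* subdirectory describes one cache. Its "level" file holds the
    cache level, "type" holds "Data", "Instruction" or "Unified", and "size"
    holds a string such as "32K".
    """
    caches = []
    for index in Path(cache_dir).glob("index*"):
        level = int((index / "level").read_text().strip())
        kind = (index / "type").read_text().strip()
        size = (index / "size").read_text().strip()
        caches.append((level, kind, size))
    return sorted(caches)  # orders by level, then type
```

On a typical modern desktop, calling read_cache_info() returns separate level-1 "Data" and "Instruction" entries followed by "Unified" level-2 and level-3 entries, mirroring the L1-D / L1-I split that CPU-Z shows.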

Level 1 cache is extremely fast because it is built into the core and runs at the core’s own clock speed, so it can return data within a few clock cycles. If the CPU cannot find the data it needs in L1, it checks L2, then L3, and finally main memory.
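The lookup order can be modelled in a few lines of Python. This is a deliberately simplified sketch, not how the hardware is built: each level is a plain set of addresses, and after a lookup the address is copied into every faster level that missed, so a repeated access hits in L1. Real CPUs also evict old data, have fixed capacities, and differ in whether levels are inclusive or exclusive of one another, all of which this model ignores. The function name lookup is hypothetical.

```python
LEVELS = ("L1", "L2", "L3")

def lookup(address, caches):
    """Return where `address` was found: "L1", "L2", "L3" or "RAM".

    `caches` maps each level name to a set of cached addresses. Levels are
    checked fastest first; afterwards the address is copied into every
    faster level that missed (simplified: no eviction, no size limits).
    """
    source = "RAM"
    for level in LEVELS:
        if address in caches[level]:
            source = level
            break
    for level in LEVELS:
        if level == source:
            break
        caches[level].add(address)  # fill the levels that missed
    return source

caches = {"L1": set(), "L2": {0x40}, "L3": {0x40, 0x80}}
print(lookup(0x40, caches))  # L2 (missed in L1)
print(lookup(0x40, caches))  # L1 (filled by the previous access)
print(lookup(0xC0, caches))  # RAM (missed in every level)
```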

What is L2 Cache?

The L2 cache is a secondary memory cache that, on most modern desktop CPUs, is built into each individual core. It is slower than L1 but still far faster than RAM, and it nearly always has more storage capacity than L1.

While some high-end CPUs have up to 32MB of L2 cache in total, the norm is closer to 6–12MB. As mentioned above, this total is split among the cores, so each core can access its own L2 cache independently.

What is L3 Cache?

Rather than being built into each CPU core, the Level 3 cache acts as a shared storage pool that the entire processor can access. It is the largest of the three cache levels, but it is much slower than L1 and L2 and may be only about twice as fast as RAM.

When the data the CPU needs is not in a cache level, that is called a cache miss; if it misses in every level, the CPU must fetch the data from the slower system memory. Adding an L3 cache reduced how often requests had to go all the way to memory, which improved performance.
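The benefit of an extra level can be estimated with the standard average memory access time (AMAT) formula, AMAT = hit time + miss rate × miss penalty, applied level by level from RAM inward. The sketch below uses relative latencies derived from the ratios quoted in this article (L1 = 1, L2 = 4, L3 = 50, RAM = 100 time units, i.e. L1 100 times, L2 25 times, and L3 twice as fast as RAM); the miss rates are made-up illustrative values, not measurements.

```python
def amat(levels, memory_latency):
    """Average memory access time.

    levels: (hit_time, local_miss_rate) pairs ordered from L1 outward.
    memory_latency: cost of going to RAM after missing every level.
    """
    time = memory_latency
    # Work inward from RAM: each level's miss penalty is the AMAT of the
    # levels behind it.
    for hit_time, miss_rate in reversed(levels):
        time = hit_time + miss_rate * time
    return time

# Illustrative miss rates (assumed): L1 10%, L2 50%, L3 30%.
with_l3 = amat([(1, 0.10), (4, 0.50), (50, 0.30)], memory_latency=100)
without_l3 = amat([(1, 0.10), (4, 0.50)], memory_latency=100)
print(f"with L3: {with_l3:.1f}, without L3: {without_l3:.1f}")
# with L3: 5.4, without L3: 6.4
```

With these assumed numbers, the L3 cache cuts the average access time from 6.4 to 5.4 units. If the L3 miss rate is raised to 50%, the gain disappears entirely: an L3 that is only twice as fast as RAM has to hit often to pay for its own lookup time.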

L3 cache was originally often placed on a separate chip on the motherboard. On modern CPUs it is nearly always built into the processor itself, which improves efficiency.

Which Is Better: L1, L2, or L3 Cache?

The three tiers of cache memory differ primarily in terms of size, speed, and location.

Although the L1 cache has a small storage capacity, it can operate up to 100 times faster than RAM, making it the fastest memory in a computer after the CPU’s own registers. Every CPU core has a separate L1 cache, which is typically 64KB in size.

L2 cache is several times larger than L1 but slower: roughly 25 times faster than RAM rather than 100. As with L1, each CPU core typically has its own L2 cache. Older designs commonly used 256–512KB per core, while many recent desktop CPUs have 1MB or more per core.

Although the L3 cache may be only about twice as fast as system memory, it has the largest storage capacity, often 32MB or more. It is not part of any individual core, but it is typically integrated into the CPU.

When should I choose a CPU with more L3 cache?

Choosing a CPU with more L3 cache isn’t a one-size-fits-all decision. It depends on various factors like your workload, budget, and other CPU features. Here’s a breakdown to help you decide:

When to prioritize more L3 cache:

- Frequent data reuse: If your applications and workflows repeat tasks or access the same data many times, a larger L3 cache can significantly improve performance by keeping that data close to the cores and reducing trips to slower main memory. This applies to:

  - Heavy multitasking: Working with multiple demanding applications simultaneously.

  - Content creation: Rendering 3D graphics, video editing, large-scale photo manipulation.

  - Scientific computing: Complex simulations and calculations involving extensive data access.

  - Gaming: Particularly games with large open worlds and complex AI, where scene and asset data are accessed repeatedly and benefit from a larger cache.

- Limited core count: If your CPU has fewer cores (e.g., 4 cores), a larger L3 can compensate for some potential limitations in parallel processing by keeping relevant data readily available for each core.

- Memory-intensive tasks: While not a substitute for sufficient RAM, a larger L3 can alleviate some pressure on main memory if your work involves handling immense datasets.
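The effect of data reuse can be illustrated with Python’s functools.lru_cache, whose cache_info() method reports hits and misses. This is only an analogy: a CPU’s L3 is set-associative and its replacement policy varies by design, often only approximating LRU. Still, the pattern is similar. A program that repeatedly sweeps over 10 items gets most lookups served by a cache that can hold all of them, and none from a cache that is slightly too small. The function name hit_rate is hypothetical.

```python
from functools import lru_cache

def hit_rate(cache_size, working_set, passes):
    """Sweep `passes` times over `working_set` distinct keys through an LRU
    cache holding `cache_size` entries; return the fraction of hits."""

    @lru_cache(maxsize=cache_size)
    def fetch(key):
        # Stands in for a slow trip to main memory.
        return key * 2

    for _ in range(passes):
        for key in range(working_set):
            fetch(key)
    info = fetch.cache_info()
    return info.hits / (info.hits + info.misses)

print(hit_rate(cache_size=16, working_set=10, passes=5))  # 0.8
print(hit_rate(cache_size=8, working_set=10, passes=5))   # 0.0
```

When the working set fits, only the first sweep misses. When it does not, LRU evicts each item just before it is needed again, so every access misses. This cliff is why extra L3 helps most when it lets a workload’s frequently reused data fit, and why returns diminish once it already does.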

However, more L3 isn’t always the answer:

- Diminishing returns: Beyond a certain size (typically around 32MB for mainstream CPUs), the performance gains from additional L3 become less significant, while cost and power consumption increase.

- Other CPU features: Don’t solely focus on L3. Consider factors like clock speed, core count, instruction set optimizations, and architectural innovations, which can also significantly impact performance.

- Budget constraints: Larger L3 often comes at a higher price tag. Determine if the potential performance gains justify the cost within your budget.

Can Memory Cache Be Deleted?

While some caches, like the system or browser cache, can be emptied or erased, there is no user-facing way to clear the CPU cache; the processor manages its contents automatically. SRAM is volatile memory, much like DRAM, meaning its contents are lost when power is removed. Everything stored in the cache is erased the moment the computer is turned off.

How Much Cache Memory Do I Need?

As with most forms of memory, the more cache a CPU has, the better. Because cache cannot be upgraded, it’s important to make sure the processor you choose has enough of it. That said, how much you need depends on how you use your computer, so you shouldn’t obsess over a single CPU characteristic. CPU performance is also influenced by clock speeds, the number of cores and threads, and other factors.

64KB of L1 cache per core is a reasonable starting point. If a specification lists only the total, divide it by the CPU’s core count to get the per-core figure (this applies to L1 and L2, which belong to individual cores; L3 is shared). 256KB of L2 cache per core is perfectly fine, but gamers might want to consider 512KB. For most uses, 32 to 96MB of L3 cache is sufficient.
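As a quick worked example, the sketch below converts spec-sheet totals into per-core figures for a hypothetical 8-core CPU (the cache sizes are invented for illustration). Only L1 and L2 are divided, since L3 is shared by all cores. On hybrid CPUs with different core types, the result is an average rather than any one core’s actual size.

```python
def per_core_cache(totals_kb, cores):
    """Convert spec-sheet cache totals (in KB) into per-core figures.

    L1 and L2 are private to each core, so their totals are divided by the
    core count. L3 is shared by every core and is reported unchanged.
    """
    return {
        "L1 per core": totals_kb["L1"] / cores,
        "L2 per core": totals_kb["L2"] / cores,
        "L3 shared": totals_kb["L3"],
    }

# Hypothetical 8-core CPU: 512KB L1, 8MB L2, 32MB L3 in total.
print(per_core_cache({"L1": 512, "L2": 8192, "L3": 32768}, cores=8))
# {'L1 per core': 64.0, 'L2 per core': 1024.0, 'L3 shared': 32768}
```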

What are some common cache-related problems, and how can I fix them?

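Caches, despite their speed benefits, can introduce their own set of problems. Most of the issues below arise in software caches (such as application, database, or web caches) rather than in the CPU’s hardware cache, though thrashing affects both. Here are some common cache-related problems and how you can fix them: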

1. Outdated data:

- Problem: The cached data is outdated and doesn’t reflect the latest changes made to the source. This can lead to inconsistencies and errors.

- Solution:

  - Cache invalidation: Implement mechanisms to invalidate the cache when the source data changes, for example with timestamps, version numbers, or explicit invalidation messages.

  - Cache refresh: Give cached data an appropriate time-to-live (TTL) so that it expires and is reloaded after a set period, ensuring updates are picked up.
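Both fixes can be combined in a small software cache. The Python sketch below is a hypothetical design, not a specific library: each entry records the source’s version number and the time it was stored, and it is reloaded when the caller passes a different version or when its time-to-live has passed. The invalidate method models an explicit invalidation message, and the clock is injectable so expiry can be tested without waiting.

```python
import time

class TTLCache:
    """Hypothetical software cache with version- and time-based invalidation."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock        # injectable so tests can fake time
        self._entries = {}        # key -> (value, version, stored_at)

    def get(self, key, version, load):
        """Return the cached value, calling load(key) if the entry is missing,
        was stored for a different version, or is older than the TTL."""
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None:
            value, cached_version, stored_at = entry
            if cached_version == version and now - stored_at < self.ttl:
                return value      # fresh hit
        value = load(key)         # missing, expired, or outdated: reload
        self._entries[key] = (value, version, now)
        return value

    def invalidate(self, key):
        """Drop an entry explicitly, e.g. on an invalidation message."""
        self._entries.pop(key, None)
```

For example, cache.get("user:42", version=7, load=fetch_user), with fetch_user standing in for a hypothetical loader, calls the loader only on the first request, after the TTL expires, or once a different version number is passed.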

2. Cache poisoning:

- Problem: Malicious actors inject invalid or harmful data into the cache. This can lead to security vulnerabilities, data corruption, or system instability.

- Solution:

  - Input validation: Implement strict input validation mechanisms to prevent malicious data from entering the cache in the first place.

  - Hashing and checksums: Use hashing and checksums to verify data integrity and detect potential tampering before it reaches the cache.
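A minimal Python sketch of the checksum idea: data is admitted to the cache only if its SHA-256 digest matches a digest obtained from a trusted source. Where that trusted digest comes from (a signed manifest or a separate trusted service, for instance) is an assumption outside this sketch; if an attacker can also change the expected digest, the check protects nothing, and a keyed HMAC or digital signature is the usual alternative. VerifiedCache is a hypothetical name.

```python
import hashlib
import hmac

class VerifiedCache:
    """Hypothetical cache that admits data only if its SHA-256 digest matches
    a digest obtained from a trusted source."""

    def __init__(self):
        self._store = {}

    def put(self, key, payload: bytes, trusted_sha256: str) -> bool:
        digest = hashlib.sha256(payload).hexdigest()
        # compare_digest avoids leaking how many characters matched via timing.
        if not hmac.compare_digest(digest, trusted_sha256):
            return False          # reject corrupted or tampered data
        self._store[key] = payload
        return True

    def get(self, key):
        return self._store.get(key)
```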

3. Thrashing:

- Problem: Frequent cache misses due to excessive data or conflicting access patterns lead to constant data swapping between cache and main memory, slowing down the system.

- Solution:

  - Cache sizing and tuning: Analyze your access patterns and adjust cache size and eviction policies to minimize misses and thrashing.

  - Data layout and organization: Organize data efficiently to minimize cache conflicts and improve spatial locality.

  - Algorithm optimization: Consider alternative algorithms with more predictable memory access patterns.
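The effect of data layout on spatial locality can be shown with a small simulation. The Python sketch below models a fully associative LRU cache of 32 lines of 64 bytes (64 bytes is a common x86 line size; the tiny capacity is chosen so the effect is visible) and counts misses while visiting every element of a 64 × 64 matrix of 8-byte values stored row by row. It is a model, not a benchmark: real caches are set-associative, and hardware prefetchers hide some of these misses.

```python
from collections import OrderedDict

LINE_SIZE = 64  # bytes per cache line (assumed)
ELEM_SIZE = 8   # bytes per matrix element, e.g. a double

def count_misses(addresses, num_lines):
    """Count misses in a fully associative LRU cache of num_lines lines."""
    cache = OrderedDict()
    misses = 0
    for addr in addresses:
        line = addr // LINE_SIZE
        if line in cache:
            cache.move_to_end(line)        # hit: mark line as recently used
        else:
            misses += 1
            cache[line] = True
            if len(cache) > num_lines:
                cache.popitem(last=False)  # evict least recently used line
    return misses

def traversal(n, row_major):
    """Yield byte addresses visited in an n x n matrix stored row by row."""
    for outer in range(n):
        for inner in range(n):
            row, col = (outer, inner) if row_major else (inner, outer)
            yield (row * n + col) * ELEM_SIZE

print(count_misses(traversal(64, row_major=True), num_lines=32))   # 512
print(count_misses(traversal(64, row_major=False), num_lines=32))  # 4096
```

Walking along rows uses all eight values in each 64-byte line before moving on, so only one access in eight misses. Walking down columns touches a new line on every step, and with only 32 lines the cache has evicted each one before the next column comes back to it, so every access misses: a small-scale case of thrashing.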

4. Performance bottlenecks:

- Problem: Certain data or operations rely heavily on the cache, causing bottlenecks if it’s unavailable or overloaded.

- Solution:

  - Identify hot spots: Use profiling tools to identify data or operations causing cache bottlenecks.

  - Prioritize caching: Prioritize caching for critical data and operations that significantly benefit from cache access.

  - Optimize data access: Reduce unnecessary cache accesses by optimizing algorithms and data structures.

5. Memory leaks:

- Problem: Cached data isn’t properly released when no longer needed, leading to memory leaks and reduced performance over time.

- Solution:

  - Garbage collection: Implement robust garbage collection mechanisms to identify and reclaim unused cached data.

  - Cache eviction policies: Use an eviction policy such as least-recently-used (LRU) to cap the cache’s size by automatically removing the entries that have gone unused the longest.

  - Monitoring and logging: Monitor memory usage and cache performance to identify potential leaks and take corrective action.
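Bounding a software cache with an LRU policy is a simple guard against unbounded growth. The Python sketch below is a hypothetical minimal implementation built on collections.OrderedDict; it also counts evictions, the kind of metric the monitoring step could log.

```python
from collections import OrderedDict

class LRUCache:
    """Bounded cache: once it holds more than `capacity` entries, the least
    recently used one is evicted, so memory use cannot grow without limit."""

    def __init__(self, capacity):
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        self.evictions = 0              # metric worth logging or monitoring
        self._data = OrderedDict()      # ordered from least to most recent

    def get(self, key, default=None):
        if key not in self._data:
            return default
        self._data.move_to_end(key)     # mark as most recently used
        return self._data[key]

    def put(self, key, value):
        self._data[key] = value
        self._data.move_to_end(key)
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # evict least recently used
            self.evictions += 1

    def __len__(self):
        return len(self._data)
```

When the goal is simply to memoize a function’s results in Python, functools.lru_cache(maxsize=...) provides the same bounded behaviour without a custom class.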

Remember:

- Diagnosing and fixing cache-related problems requires careful analysis of your specific system and software behavior.

- Experimenting with different solutions and monitoring the results is crucial for finding the optimal approach.

- Balancing cache utilization with efficient memory management and data integrity is key to maintaining a healthy and performant system.

What are some software tools to monitor and analyze cache performance?

Choosing the right software tool to monitor and analyze cache performance depends on your specific needs and system environment. Here are some popular options across different platforms:

Linux:

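- perf: A powerful command-line profiler that reads the CPU’s hardware performance counters, including cache references and misses; its perf mem mode can also sample memory access latencies.

- cachetop: A command-line tool from the BCC collection that shows cache hit and miss statistics per process in a top-like view. Note that it measures the Linux page cache (file data cached in RAM), not the CPU’s L1, L2, or L3 caches.

- Valgrind: A suite of dynamic analysis tools that includes Cachegrind, which can simulate the CPU’s caches while running a program and report misses per function and source line, helping identify potential bottlenecks.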
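As an example of putting perf to work, the Python sketch below runs a command under perf stat with the generic cache-references and cache-misses events and computes a miss ratio. It assumes Linux with perf installed and permission to read hardware counters, and it relies on perf’s CSV mode (-x,), in which the first field is the count and the third is the event name. On some systems, such as hybrid Intel CPUs, event names are reported differently and the parser would need adjusting. These generic events usually refer to the last-level cache, but the mapping varies by CPU. Both function names are hypothetical.

```python
import subprocess

def parse_perf_csv(text):
    """Map event name -> count from `perf stat -x,` output.

    Rows whose count is not a number (e.g. "<not supported>") are skipped,
    and modifiers such as ":u" are stripped from event names.
    """
    counts = {}
    for line in text.splitlines():
        fields = line.split(",")
        if len(fields) < 3 or not fields[0].strip().isdigit():
            continue
        counts[fields[2].split(":")[0]] = int(fields[0])
    return counts

def cache_miss_ratio(cmd):
    """Run cmd (a list of strings) under perf stat; return misses / references."""
    result = subprocess.run(
        ["perf", "stat", "-x,", "-e", "cache-references,cache-misses", *cmd],
        capture_output=True, text=True, check=True,
    )
    counts = parse_perf_csv(result.stderr)  # perf stat reports on stderr
    return counts["cache-misses"] / counts["cache-references"]
```

For example, cache_miss_ratio(["./my_program"]), where my_program is a hypothetical binary, returns a value between 0 and 1; comparing it across versions of the same program is more informative than any single reading.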

Windows:

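- DebugView (DbgView): A free Sysinternals utility that displays debug output from applications and drivers in real time. It is useful for general troubleshooting but does not measure cache performance.

- RAMMap: A Sysinternals memory visualization tool that shows how Windows is using physical memory, including the system file cache and standby list. This is the operating system’s file cache, not the CPU cache.

- Process Explorer: An advanced process monitor that provides detailed per-process information such as CPU and memory usage. It does not report CPU cache hit or miss rates; for those, vendor profilers such as Intel VTune Profiler or AMD uProf read the processor’s hardware counters.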

Conclusion

Cache memory is a crucial component of modern processors, significantly affecting CPU performance by providing quick access to frequently used data. While cache size does matter, and how much it matters depends on your workload, the amount of cache is fixed by the processor you buy rather than something you can tune yourself. The choice of a processor should therefore be based on a holistic assessment of its overall performance, taking into account benchmarks and real-world usage scenarios.